Primate Pose Estimation

Designed a deep learning model for the OpenMonkeyChallenge that estimates monkey poses in natural habitats by combining an R-CNN species classifier with Convolutional Pose Machines (CPMs). The model tracks 17 pose landmarks, and its accuracy is measured by Mean Per Joint Position Error (MPJPE). It achieved an MPJPE of 0.217, a step toward AI-assisted wildlife monitoring and behavioral studies.

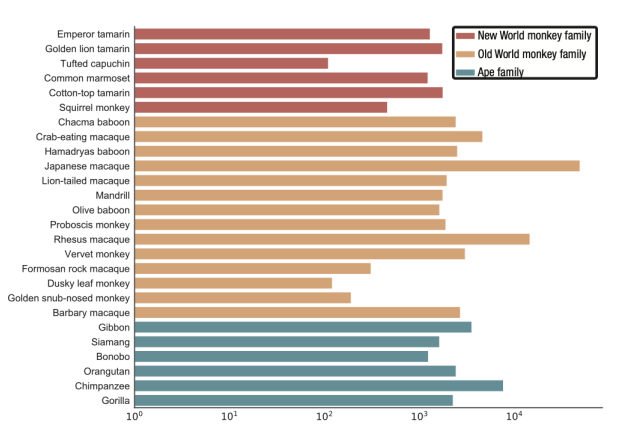

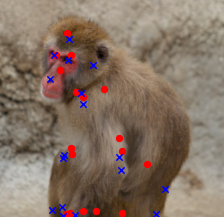

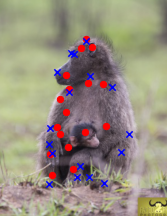

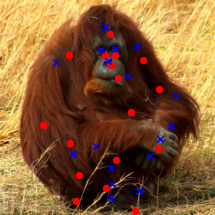

This project estimates the pose of non-human primates (NHPs) in varied environments and specifically targets the OpenMonkeyChallenge, a computer vision benchmark for NHP pose estimation. The challenge dataset consists of over 100,000 annotated photographs of NHPs in naturalistic contexts, collected from various sources and covering many species of monkeys and apes. A pose is defined by 17 landmarks: Nose, Left eye, Right eye, Head, Neck, Left shoulder, Left elbow, Left wrist, Right shoulder, Right elbow, Right wrist, Hip, Left knee, Left ankle, Right knee, Right ankle, and Tail. The dataset has been made publicly available by Yao et al.
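The 17 landmarks can be pinned to fixed channel indices so that belief maps, predictions, and ground truth all agree on which index means which body part. This is a minimal sketch that assumes the order listed above; whether it matches the index order in the official annotation files is an assumption worth checking, and the names `LANDMARKS` and `LANDMARK_INDEX` are hypothetical.

```python
# Landmark order as listed in this write-up (assumed; verify against the
# OpenMonkeyChallenge annotation format before relying on it).
LANDMARKS = [
    "nose", "left_eye", "right_eye", "head", "neck",
    "left_shoulder", "left_elbow", "left_wrist",
    "right_shoulder", "right_elbow", "right_wrist",
    "hip", "left_knee", "left_ankle", "right_knee", "right_ankle",
    "tail",
]
NUM_LANDMARKS = len(LANDMARKS)  # 17 landmarks per pose

# Map each landmark name to its channel index in a belief-map tensor.
LANDMARK_INDEX = {name: i for i, name in enumerate(LANDMARKS)}
```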

This project was completed as part of the Computer Vision course (University of Minnesota, Fall 2022) under Dr. Junaed Sattar.

The dataset is split into training, validation, and test sets (60%, 20%, and 20%, respectively).
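A 60/20/20 split can be reproduced with a seeded shuffle. This sketch assumes a simple random split over image identifiers; the challenge may ship its own fixed splits, and `split_dataset` is a hypothetical helper, not part of any official toolkit.

```python
import random


def split_dataset(items, fractions=(0.6, 0.2, 0.2), seed=0):
    """Shuffle `items` reproducibly and split them into train/val/test lists."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> same split every run
    n = len(shuffled)
    n_train = int(n * fractions[0])
    n_val = int(n * fractions[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # any rounding remainder goes to test
    return train, val, test


train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))  # 60 20 20
```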

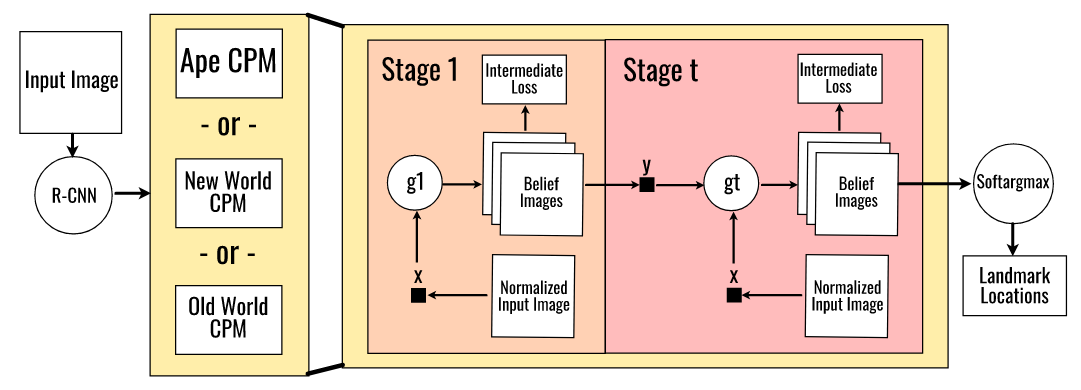

My baseline follows Wei et al., who use the Convolutional Pose Machine (CPM) to estimate landmark locations in humans. Here the CPM is divided into 3 stages, each of which outputs 18 belief maps (17 for the landmarks plus 1 for the background). Each belief map encodes the confidence that its landmark is present at each location in the image.
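The belief maps work in both directions: training targets place a Gaussian peak at each ground-truth landmark (as in Wei et al.), and at inference each landmark's location is read off as the peak of its channel, with the background channel ignored. This NumPy sketch assumes the final-stage maps are at image resolution; `sigma`, `make_belief_maps`, and `decode_belief_maps` are hypothetical names, not the project's code.

```python
import numpy as np

NUM_LANDMARKS = 17
NUM_CHANNELS = NUM_LANDMARKS + 1  # 17 landmark maps + 1 background map


def make_belief_maps(keypoints, height, width, sigma=2.0):
    """Target maps: one Gaussian peak per landmark plus a background map.

    keypoints: (17, 2) array of (x, y) pixel coordinates.
    sigma: assumed Gaussian width in pixels.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    maps = np.zeros((NUM_CHANNELS, height, width), dtype=np.float32)
    for j, (x, y) in enumerate(keypoints):
        maps[j] = np.exp(-((xs - x) ** 2 + (ys - y) ** 2) / (2 * sigma ** 2))
    # Background is high wherever no landmark peak is present.
    maps[-1] = 1.0 - maps[:NUM_LANDMARKS].max(axis=0)
    return maps


def decode_belief_maps(maps):
    """Return (17, 2) integer (x, y) peak locations and their confidences."""
    landmark_maps = maps[:NUM_LANDMARKS]  # drop the background channel
    flat = landmark_maps.reshape(NUM_LANDMARKS, -1)
    idx = flat.argmax(axis=1)  # index of the most confident pixel per landmark
    ys, xs = np.unravel_index(idx, landmark_maps.shape[1:])
    coords = np.stack([xs, ys], axis=1)
    confidence = flat.max(axis=1)
    return coords, confidence
```

Encoding a set of keypoints with `make_belief_maps` and decoding the result with `decode_belief_maps` recovers the original integer coordinates, which is a quick sanity check for any target-generation code.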

The diverse anatomy and behavior of non-human primate species make it difficult to generalize the relationships between image and context features for pose estimation. To handle the differences between species, I trained a separate CPM for each species class (Old World monkeys, New World monkeys, and apes). At inference time, an R-CNN first classifies the primate into one of these classes, and the corresponding CPM then estimates its landmark locations.
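The two-stage inference amounts to a dispatch on the predicted class. In this sketch, `classify` and the entries of `pose_models` are hypothetical callables standing in for the trained R-CNN and the three per-class CPMs; the class labels are assumed strings.

```python
from typing import Any, Callable, Dict, Tuple

SPECIES_CLASSES = ("old_world", "new_world", "ape")  # assumed label names


def estimate_pose(
    image: Any,
    classify: Callable[[Any], str],
    pose_models: Dict[str, Callable[[Any], Any]],
) -> Tuple[str, Any]:
    """Classify the primate, then run the pose model trained for that class."""
    species = classify(image)  # stage 1: species classifier (R-CNN)
    if species not in pose_models:
        raise KeyError(f"no pose model for class {species!r}")
    keypoints = pose_models[species](image)  # stage 2: class-specific CPM
    return species, keypoints
```

One consequence of this design is that a misclassification in stage 1 routes the image to a CPM trained on a different species class, so classifier errors propagate directly into pose error.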

The proposed and baseline methods were evaluated on MPJPE and PCK (Percentage of Correct Keypoints). Overall, the proposed model performs comparably to the baseline: it gives better results for apes and New World monkeys but does not outperform the baseline on Old World monkeys.
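MPJPE is the mean Euclidean distance between predicted and ground-truth landmark positions, and PCK is the fraction of landmarks whose error falls below a threshold. Because the reported MPJPE of 0.217 is unitless, this sketch assumes errors are divided by a per-image scale such as the bounding-box width; that normalizer, the PCK threshold of 0.2, and the function names are assumptions, not the challenge's official scoring code.

```python
import numpy as np


def normalized_errors(pred, gt, scale, visible=None):
    """Per-landmark Euclidean error divided by a per-image scale.

    pred, gt: (N, J, 2) arrays of (x, y) coordinates.
    scale:    (N,) array, e.g. bounding-box width (assumed normalizer).
    visible:  optional (N, J) boolean mask of annotated landmarks.
    Returns a 1-D array of errors for the landmarks that are scored.
    """
    err = np.linalg.norm(pred - gt, axis=-1) / scale[:, None]
    if visible is not None:
        err = err[visible]  # keep only annotated landmarks
    return err.ravel()


def mpjpe(pred, gt, scale, visible=None):
    """Mean normalized per-joint position error."""
    return float(normalized_errors(pred, gt, scale, visible).mean())


def pck(pred, gt, scale, threshold=0.2, visible=None):
    """Fraction of landmarks whose normalized error is below `threshold`."""
    return float((normalized_errors(pred, gt, scale, visible) < threshold).mean())
```

Per-class comparisons like the ones above (apes, New World, Old World) follow by calling these functions on the subset of images belonging to each class.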

The performance of my method could be improved further in three ways. First, additional data augmentation could reduce the impact of poor training samples (images with multiple or occluded non-human primates). Second, the number of stages in each CPM could be increased to widen the range of learned context features. Finally, more samples of New World monkeys and apes could be added so that Old World monkeys do not dominate the dataset.
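Collecting more New World monkey and ape images addresses the class imbalance directly. A common stopgap, swapped in here as an illustration rather than something this project did, is to resample the existing training set so that each species class is drawn equally often. `inverse_frequency_weights` is a hypothetical helper; the class labels are assumed strings.

```python
import random
from collections import Counter


def inverse_frequency_weights(labels):
    """Per-sample weights so each class carries equal total sampling weight.

    labels: sequence of class names, one per training image.
    """
    counts = Counter(labels)
    num_classes = len(counts)
    # Each class gets total weight 1/num_classes, split evenly over its samples.
    return [1.0 / (num_classes * counts[label]) for label in labels]


labels = ["old_world"] * 8 + ["new_world"] * 2
weights = inverse_frequency_weights(labels)
# Draw a class-balanced batch of sample indices (with replacement).
batch = random.choices(range(len(labels)), weights=weights, k=16)
```

Resampling only reweights existing images, so rare classes are repeated rather than diversified; it complements, but does not replace, adding new samples.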